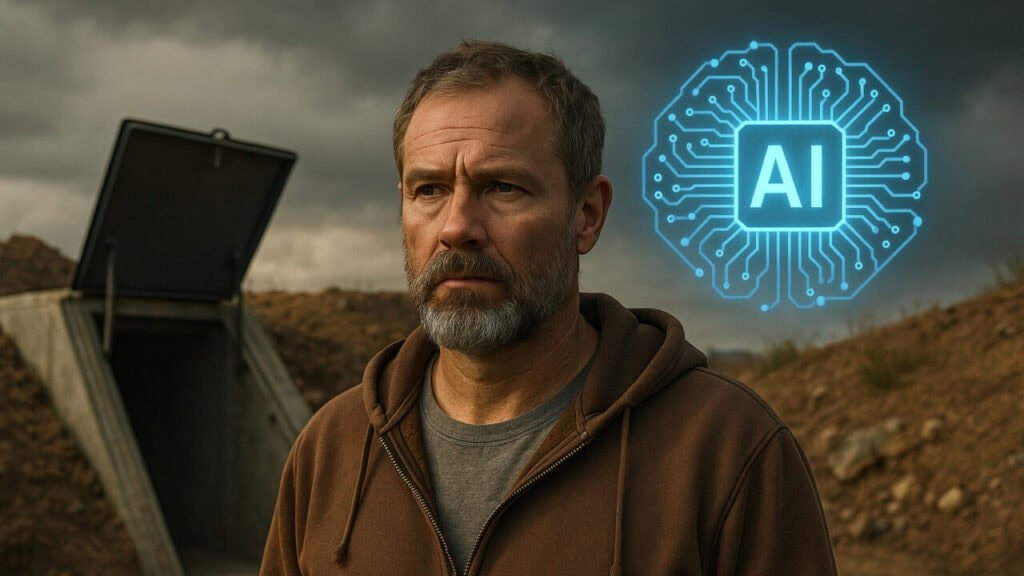

AI apocalypse super preppers are building bunkers, quitting careers, and redesigning their lives in anticipation of existential threats from AI. The movement raises urgent questions about our collective response to rapidly advancing artificial intelligence.

In tech hubs like Silicon Valley, a new breed of individuals, called “AI apocalypse super preppers,” is emerging. These people are taking extraordinary steps, from purchasing land for survival bunkers to abandoning conventional careers. Driven by both fear of AI-powered disasters and hope for control, they come from diverse backgrounds: rationalists, AI researchers, sex workers, entrepreneurs, and venture capitalists. Some focus on survival; others invest in experiences or entrepreneurial escape plans.

The Irrational Logic Behind Bunker Mentality

For many, the idea of a misaligned superintelligence is not hypothetical—it’s a looming reality. One researcher known as “Henry” initially attempted to build a do-it-yourself bioshelter. After reconsideration, he decided to acquire land in California to develop a more permanent structure capable of sheltering friends and family in a potential AI-dominated future—one in which humans are subjugated yet alive in a “human zoo.” His story mirrors the anxieties of countless others who believe that preparation today could be the only guarantee of survival tomorrow.

From Hedonism to Survival—A Wide Spectrum

Not all plans involve underground bunkers and survivalist gear. A faction of these preppers embraces a hedonistic lifestyle—prioritizing joy, creativity, and experiences before possible collapse. Others double down on productivity and wealth—believing that accumulation and innovation might offer a semblance of control over an uncertain future. Still, a skeptical few warn against alarmism and divisive fear-mongering, reminding us that over-preparation can sometimes create the very panic it seeks to avoid.

Entrepreneurship Born from Fear

Fear, in this context, propels innovation. Startups and individuals like Fonix and Ross Gruetzemacher harness existential anxiety to fuel business ventures. They operate in the uncertain space where AI risk intersects with opportunity—turning anxiety into actionable solutions and products. For them, being an AI apocalypse super prepper is not just a defensive act, but also a chance to build the next generation of companies that might thrive in uncertain times.

Societal Ripples of Existential Prepping

This emerging culture reflects a broader societal fixation on existential risk. Concern over AI’s capability to trigger global catastrophe, along with cultural shorthand like “P(doom)” (the probability of doom from AI), highlights a growing anxiety among experts and laypeople alike. Yet many believe preparedness should mean not just bunkers but also meaningful engagement in safety research and proactive governance. The AI apocalypse super preppers movement therefore raises a central question: are we investing enough in shared resilience, or are we leaving survival only to those with the resources to prepare individually?

Lessons: How to Make the Most of AI Prepping (Responsibly)

Consider these nuanced approaches:

• Balance awareness with skepticism: Understand AI risks without succumbing to paralyzing fear.

• Focus on alignment, not escape: Few tech leaders believe survival bunkers are the answer—true safety lies in aligning AI with human values.

• Invest in safety research: Support technical and governance frameworks designed to mitigate catastrophic risks, not just personal survival plans.

• Strengthen communities: Collective resilience is more effective than isolation; cooperation could be the most powerful form of “prepping.”

As public anxiety over AI grows, the story of AI apocalypse super preppers reveals more about ourselves than about the machines: it reflects our deepest fears, our capacity for innovation, and our uncertainty about the future. Perhaps the most powerful prepper tool remains our collective imagination and responsibility. If society can channel this energy into constructive AI governance, safety research, and inclusive dialogue, the phenomenon of AI apocalypse super preppers might evolve from paranoia into progress.

Source: Business Insider, Wikipedia
